In this final post in the series (see Part 1, Part 2, Part 3), I will discuss how to back up and restore your WSL data. After all, you probably have some pretty cool things buried in your WSL instance, like AI projects, downloaded Ollama models, and the Open WebUI Docker container, that you would be sad to lose. However, as I found out through experimentation, backing up an Ubuntu WSL instance isn’t as straightforward as you might think.

This post walks you through the tools and scripts I use to back up WSL, not only to protect my AI playground, but also the other personal files on my PC. The best part about my setup is that it costs hardly anything. In fact, I back up five computers to the cloud for less than $5 per month.

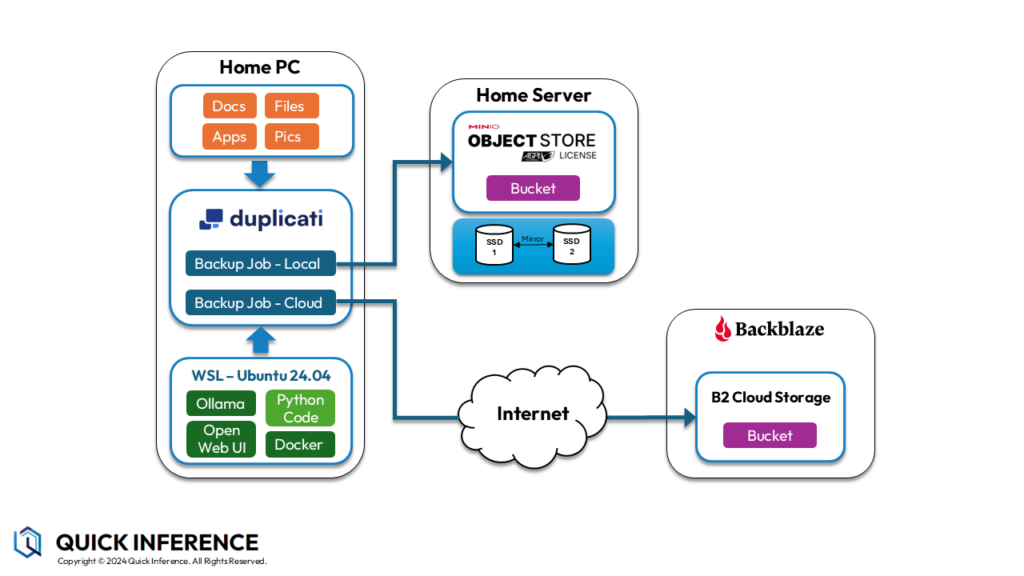

Solution Overview

For my personal backup solution, I use two open source software packages plus a cloud storage provider. This lets me protect my data both on-premises and in the cloud. While the on-prem target allows for fast backups and restores, the cloud provider protects against a disaster at my house.

Duplicati

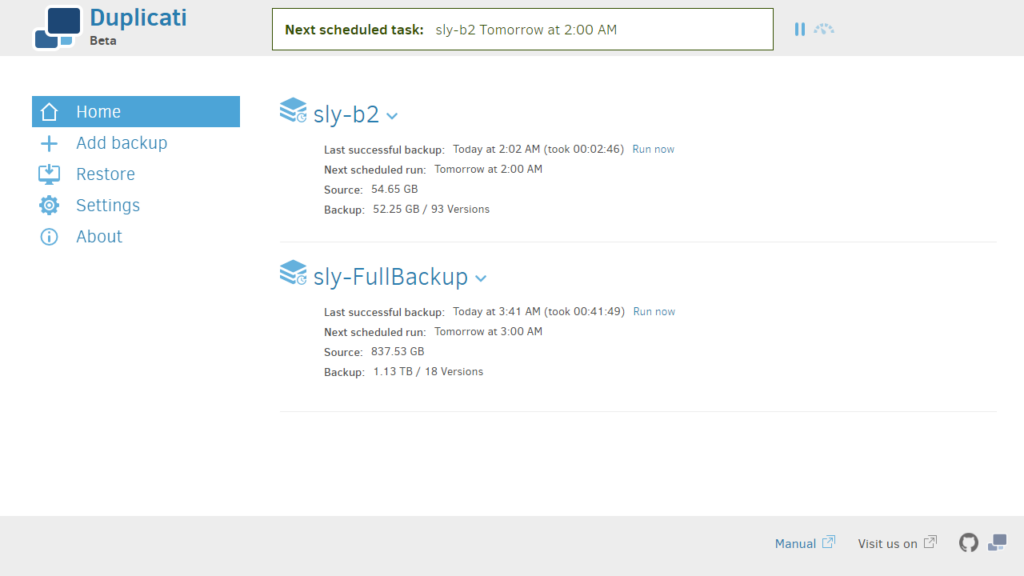

The cornerstone of my solution is a backup application named Duplicati. While the Duplicati team now has a low-priced commercial offering, I have been using their open source Duplicati client for years. (Back in the day, when they were first getting started, I made a donation to support the team’s efforts.)

My favorite thing about Duplicati is that it can back up your data to many different types of targets. These include local and network targets such as a file share, FTP, SFTP, and WebDAV, in addition to almost two dozen public cloud providers. Like many backup applications, it keeps multiple versions of your files, but it also deduplicates, compresses, and encrypts the data before it is ever uploaded anywhere.
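To make the deduplication idea concrete, here is a toy shell sketch (my own illustration, not Duplicati’s code) that cuts a file into fixed-size blocks, hashes each block with SHA-256, and counts how many blocks a deduplicating store would actually need to keep. The function name, the block size, and the choice of hash are assumptions for the demo; it relies on GNU coreutils (split, sha256sum), as found in Ubuntu.

```shell
#!/usr/bin/env bash
# Toy illustration of block-level deduplication, NOT Duplicati's actual code.
# Assumptions: fixed-size blocks, SHA-256 hashes, GNU coreutils (split, sha256sum).
set -eu

# Print "<total blocks> <unique blocks>" for FILE cut into BLOCK_SIZE-byte blocks.
dedup_stats() {
  local file="$1"
  local block_size="${2:-4096}"

  # An empty file has no blocks to store.
  if [ ! -s "$file" ]; then
    echo "0 0"
    return 0
  fi

  local tmpdir
  tmpdir="$(mktemp -d)"
  # Cut the file into numbered chunks: chunk_0000, chunk_0001, ...
  split -b "$block_size" -d -a 4 "$file" "$tmpdir/chunk_"

  # Hash every chunk; identical blocks produce identical hashes.
  local hashes total unique
  hashes="$(sha256sum "$tmpdir"/chunk_* | awk '{ print $1 }')"
  total="$(printf '%s\n' "$hashes" | wc -l)"
  unique="$(printf '%s\n' "$hashes" | sort -u | wc -l)"
  rm -rf "$tmpdir"

  # A deduplicating store only needs to keep the unique blocks.
  echo "$((total)) $((unique))"
}
```

For example, a file made of ten identical 4 KiB blocks followed by one different block reports 11 blocks, of which only 2 are unique. Because the sketch writes every block to a temporary directory, try it on small files only, not on a multi-gigabyte WSL export.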

Backup Target #1 – MinIO (On-premises)

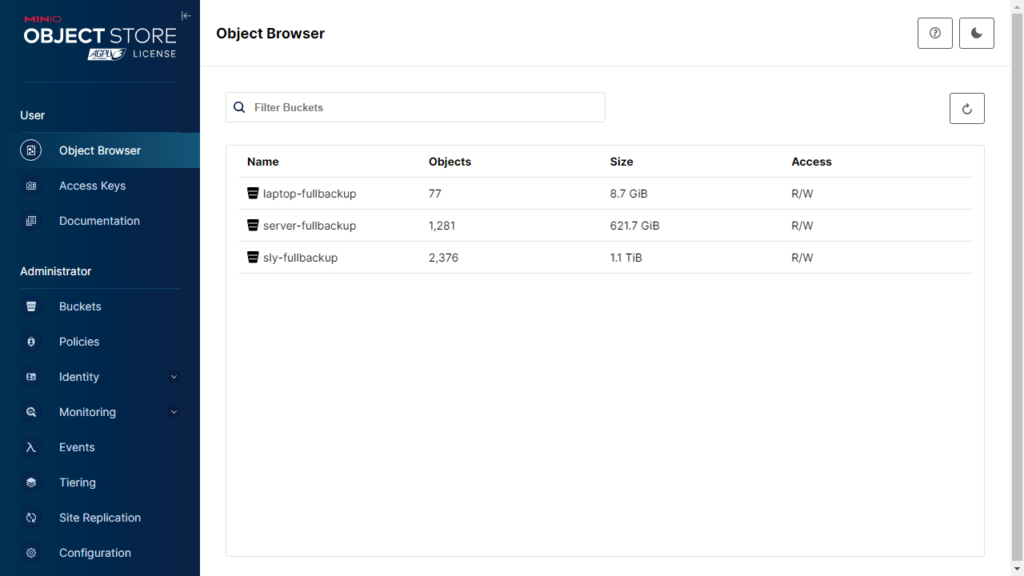

For the first backup target, I use MinIO, an open source, S3-compatible object storage application, running on my home server. (MinIO also has a commercial enterprise-grade offering, but I use the free open source version from GitHub.) The great thing about the MinIO server is that it runs on Windows, Linux, and Mac, and also comes in a containerized version. Once installed, it provides a nice web interface, which made it really easy to create a dedicated bucket for each computer in my household that I back up.

With any backup strategy, though, you need multiple layers of protection. For example, as an added layer, my MinIO buckets are stored on two mirrored 4 TB SSDs. If working in the storage industry for 15+ years has taught me anything, it is that drives fail, usually at the worst possible moment. In fact, the whole reason I have SSDs in my server is that a spinning drive failed. After replacing both of the old drives, my hope is that the SSDs are more resilient and will outlast the 4 years the mechanical drives lasted.

As a bonus, since Duplicati backs my data up over an S3-compatible object storage protocol (rather than, say, a file share), I get an added layer of protection against ransomware. Since most ransomware works by encrypting documents, pictures, and other important files on the local file system, it is unlikely to be smart enough to traverse the network over the S3 protocol and reach the files vaulted away on my home server. After all, it would need to know the server IP, bucket names, access key IDs, secret keys, and the decryption password to get at anything, so I feel pretty good about it.

Backup Target #2 – Backblaze B2 (Cloud Storage)

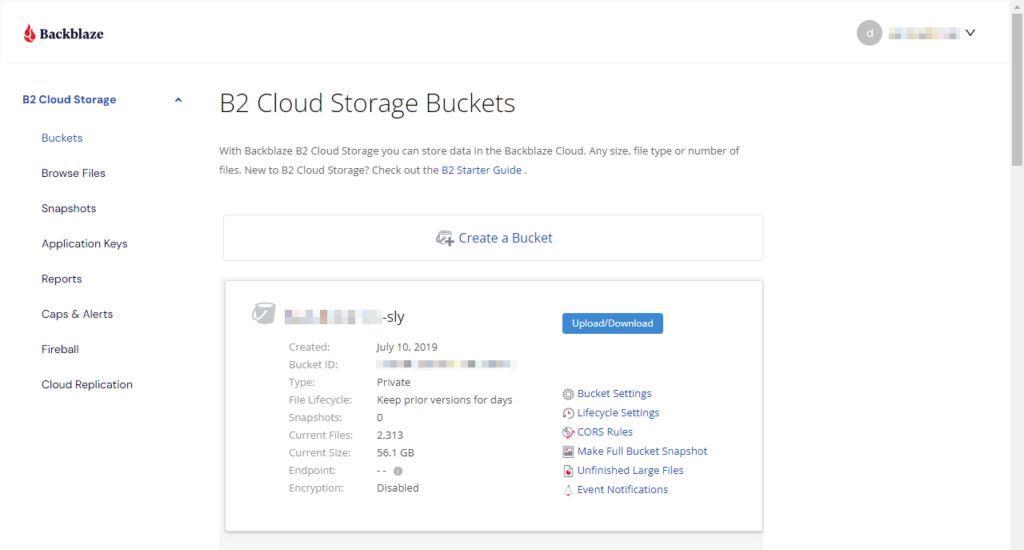

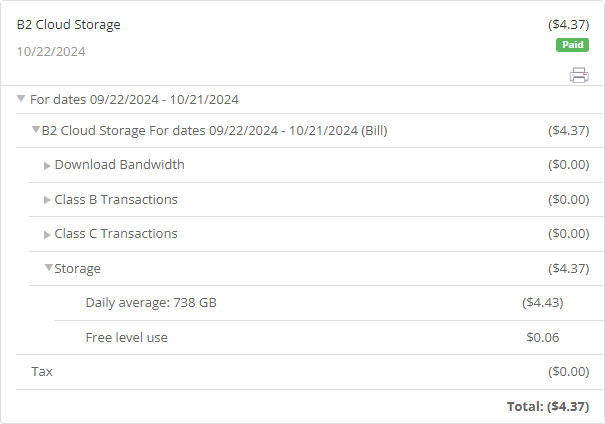

For cloud storage, Duplicati can back up to most of the popular providers, including AWS S3, Box, Dropbox, Google Cloud Storage, Google Drive, SharePoint, Rackspace, and others. I chose Backblaze B2 because it is the most cost-effective solution I have been able to find. As I am writing this, their pay-as-you-go plan charges only $6/TB/month.

As I mentioned earlier, I back up just the important files from five computers to B2, about 738 GB in total, and pay less than $5 per month.
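The cost claim is easy to sanity-check. Here is the arithmetic as a small shell function; the function name is just for illustration, and it assumes the $6/TB/month rate quoted above, 1 TB = 1,000 GB, and storage charges only (no download or API transaction fees).

```shell
#!/usr/bin/env bash
# Estimate monthly B2 storage cost. Assumptions: flat per-TB rate,
# 1 TB = 1000 GB, storage only (no download or transaction fees).
monthly_cost() {
  local gb="$1"
  local usd_per_tb="${2:-6}"   # default: the $6/TB/month quoted in this post
  awk -v gb="$gb" -v rate="$usd_per_tb" 'BEGIN { printf "%.2f\n", gb / 1000 * rate }'
}

monthly_cost 738   # prints 4.43
```

So 738 GB works out to roughly $4.43 per month, consistent with the “less than $5” figure.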

Backing up WSL running Ubuntu

When I first started trying to back up my files stored in WSL, I tried a couple of different approaches. First, I tried storing my code on the Windows NTFS file system and accessing it from within Ubuntu; however, backups failed due to access permissions. While some people have reported success backing up through the “\\wsl$\Ubuntu-24.04\” network share, I was never able to get that working correctly.

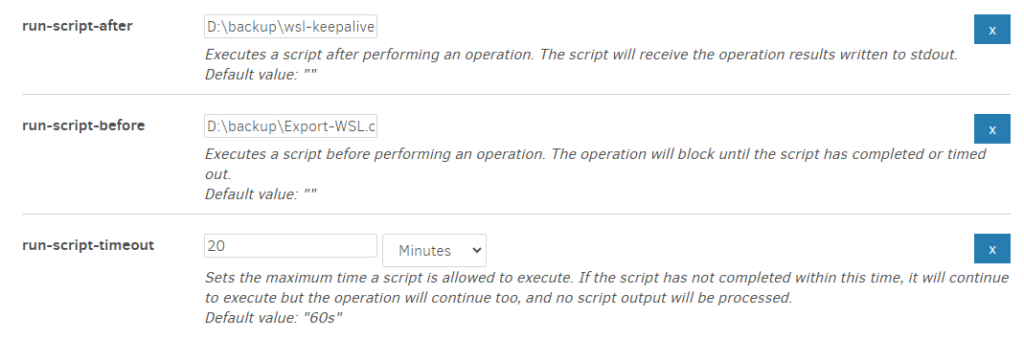

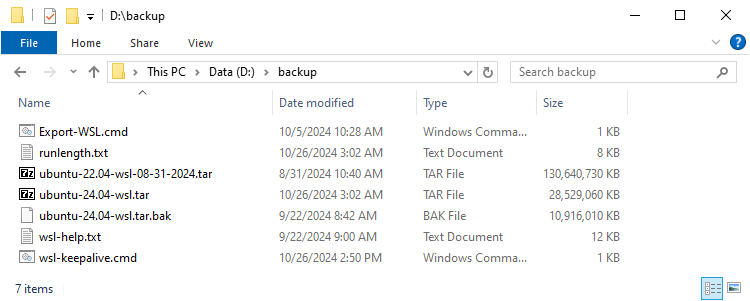

Instead, I decided to run a couple of pre-backup scripts that export my WSL instance each night, so the export is backed up along with the rest of my computer’s files.

The main script runs on Windows as part of the backup job. In essence, it first calls a cleanup script inside Ubuntu, then shuts down WSL, exports the instance, and writes the start and end times to a log file, recording the error level there if the export fails, before exiting.

Example of the Export-WSL.cmd file:

```bat
REM Clean up dbus temp files inside Ubuntu before stopping it
wsl -e bash /home/dschmitz/cleanup.sh
REM Stop WSL so the export captures a consistent file system
wsl --shutdown

echo Start time: %date% %time% >> D:\backup\runlength.txt
wsl --export Ubuntu-24.04 d:\mycode\ubuntu-24.04-wsl.tar >> D:\backup\runlength.txt
REM Save the export's exit code before any other command can change it
set EXPORT_RC=%ERRORLEVEL%
echo End time: %date% %time% >> D:\backup\runlength.txt

IF %EXPORT_RC% NEQ 0 (
    echo Error Level: %EXPORT_RC% >> D:\backup\runlength.txt
)

echo. >> D:\backup\runlength.txt
REM Always report success to the calling backup job
exit /B 0
```

What I discovered is that, because I use the “wsl --exec dbus-launch true” command to keep Ubuntu running all the time, some dbus temp files need to be deleted before the backup starts in order to get a clean backup each night.

Here is the cleanup script that the main script calls. (All it does is delete the dbus temp files, so that Duplicati doesn’t choke on them during the backup.)

Example of the /home/dschmitz/cleanup.sh file:

```bash
#!/bin/bash
# Remove leftover D-Bus temp files created by the keep-alive dbus-launch session
rm -rf /tmp/dbus*
```

And of course, when the backup job is done, it re-runs the keep-alive script post-backup to restart the WSL instance. (I detailed how to create that script in a previous post.)

Example of the wsl-keepalive.cmd file:

```bat
REM This script keeps the WSL Ubuntu instance running all the time
wsl --exec dbus-launch true
```

When finished, the Duplicati advanced options, including the 20-minute export timeout, look like this:

Recovering your WSL instance

Obviously, the best part about this setup is that you get a WSL do-over any time you need one. For example, if you install a bad package, accidentally misconfigure something, or delete an important file, recovering your WSL instance is really easy. Better still, Duplicati stores multiple versions for you to restore from. Not to mention, the export is a simple tar file that can be opened with 7-Zip. (All you need to do is open the tar file and extract anything you want.)
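If you are working from inside WSL (or any Linux box), GNU tar can do the same job as 7-Zip. The sketch below lists the entries in an export that match a pattern and extracts a single entry into a scratch directory. The function names and example paths are hypothetical; from inside WSL, the D: drive is normally visible as /mnt/d. Entry names must be passed exactly as tar -tf lists them (they may or may not start with ./), so list first, then extract.

```shell
#!/usr/bin/env bash
# Sketch: browse and extract files from a WSL export without importing it.
# Assumptions: GNU tar; function names and example paths are hypothetical.

# Print archive entries whose names match a grep pattern.
# Prints nothing (and still succeeds) when there is no match;
# tar errors, such as a missing archive, still show up on stderr.
find_in_export() {
  local archive="$1"
  local pattern="$2"
  tar -tf "$archive" | grep -e "$pattern" || true
}

# Extract one entry (name exactly as listed by tar -tf) into a scratch
# directory, leaving the live file system untouched.
extract_from_export() {
  local archive="$1"
  local member="$2"
  local dest="$3"
  mkdir -p "$dest"
  tar -xf "$archive" -C "$dest" "$member"
}
```

For example, running find_in_export against /mnt/d/mycode/ubuntu-24.04-wsl.tar with the pattern 'tmp/dbus' is a quick way to check that the nightly cleanup worked; it should normally print nothing. Extracting into a scratch directory rather than / avoids overwriting anything in the running instance.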

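Much like how we moved the WSL instance in the previous post, we can recover from last night’s backup just as easily. Once Duplicati has restored the tar file (here, to D:\backup), it takes three commands: unregister the broken instance, import the backup, and set it as the default.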

```text
C:\Users\dschmitz>wsl --unregister Ubuntu-24.04
Unregistering.
The operation completed successfully.

C:\Users\dschmitz>wsl --import Ubuntu-24.04 c:\wsl\ubuntu-24.04 d:\backup\ubuntu-24.04-wsl.tar
Import in progress, this may take a few minutes.
The operation completed successfully.

C:\Users\dschmitz>wsl --set-default Ubuntu-24.04
The operation completed successfully.
```
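One thing to watch for after an import: depending on how the distribution was originally set up, a restored instance can start as root instead of your usual user. WSL reads the default login user from the [user] section of /etc/wsl.conf, so a small helper like the hypothetical one below can add it. It is a sketch that assumes you run it inside the restored instance with sudo, that you substitute your own user name for dschmitz, and that /etc/wsl.conf does not already contain a [user] section (if it does, the function refuses and leaves the file alone).

```shell
#!/usr/bin/env bash
# Sketch: make a restored WSL instance log in as a normal user by default.
# Assumptions: run inside the distro with sudo; the optional second argument
# exists only so the function can be tried against a scratch file.
set_wsl_default_user() {
  local user="$1"
  local conf="${2:-/etc/wsl.conf}"

  # Do not try to merge into an existing [user] section; edit that by hand.
  if [ -f "$conf" ] && grep -q '^\[user\]' "$conf"; then
    echo "$conf already has a [user] section; edit it by hand" >&2
    return 1
  fi

  # Separate the new section from any existing settings with a blank line.
  if [ -s "$conf" ]; then
    printf '\n' >> "$conf"
  fi
  printf '[user]\ndefault=%s\n' "$user" >> "$conf"
}
```

For example, save it as set-default-user.sh, run sudo bash -c 'source ./set-default-user.sh && set_wsl_default_user dschmitz', and then run “wsl --terminate Ubuntu-24.04” from Windows so the setting is picked up the next time the instance starts.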

Conclusion

In this final post of the series, I have shown how easy and cost-effective it is to back up not only your WSL instance, but your whole computer. Hopefully this series has gotten you started down the exciting path of experimenting with AI projects.